TikTok, the fast-growing social network from China, has used unusual measures to protect supposedly vulnerable users. The platform instructed its moderators to mark videos of people with disabilities and limit their reach. Queer and fat people also ended up on a list of „special users“ whose videos were regarded as a bullying risk by default and capped in their reach – regardless of the content.

Documents obtained by netzpolitik.org detail TikTok’s moderation guidelines. In addition we spoke with a source at TikTok who has knowledge of content moderation policies at the video-sharing platform.

The new revelations show how ByteDance, the Beijing-based Chinese technology company behind TikTok, deals with bullying on its platform – and the controversial measures it took against it.

Previously, we examined how TikTok limits reach for political content and how its moderation policies work. We also looked at how the service deals with criticism and competition.

Vulnerable users only visible in home country

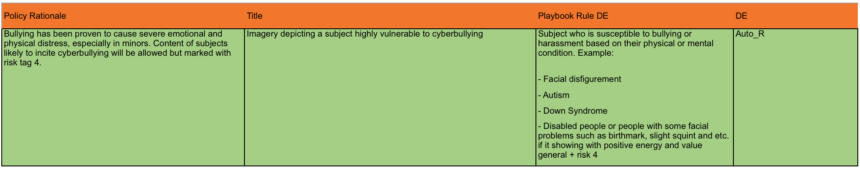

The relevant section in the moderation rules is called „Imagery depicting a subject highly vulnerable to cyberbullying“. In the explanations it says that this covers users who are „susceptible to harassment or cyberbullying based on their physical or mental condition“.

According to the memo, bullying has negative consequences for those affected. Therefore, videos of such users should always be considered a risk and their reach on the platform should be limited.

TikTok uses its moderation toolbox to limit the visibility of such users. Moderators were instructed to mark people with disabilities as „Risk 4“. This means that a video is only visible in the country where it was uploaded.

For people with an actual or assumed disability, this means that instead of reaching a global audience of one billion, their videos reached a maximum of 5.5 million people. These are the user numbers TikTok currently has globally and in Germany, respectively, according to AdAge magazine.

People with disabilities were kept away from the big stage

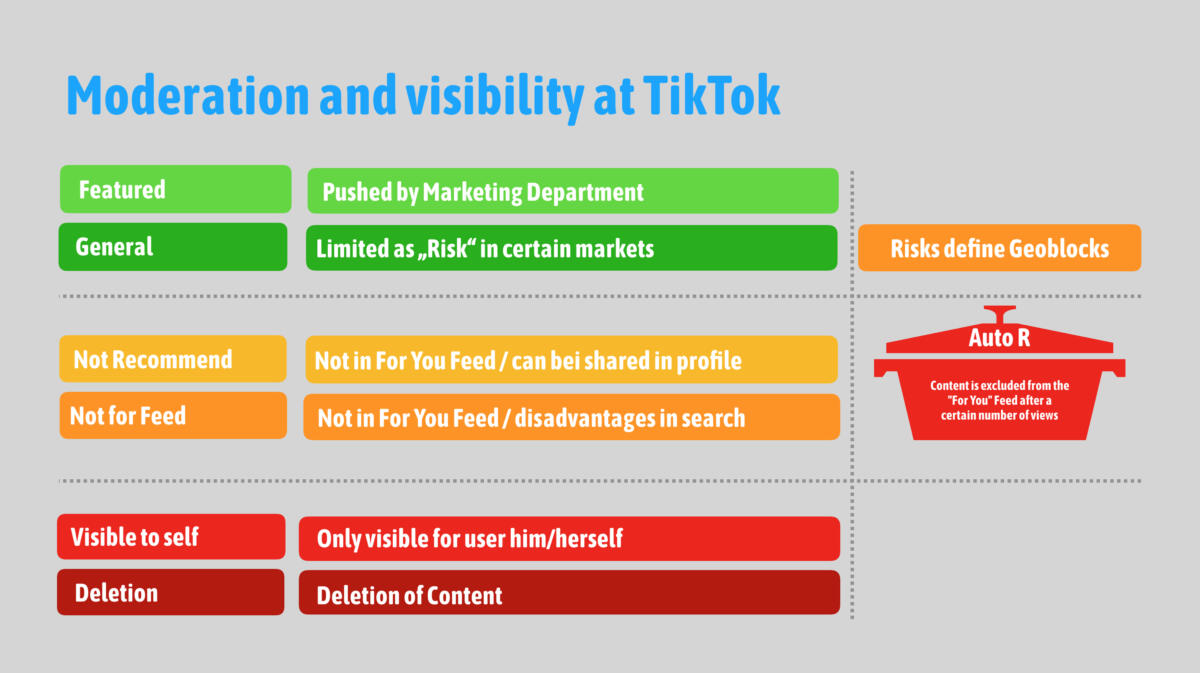

For users that were considered particularly vulnerable, TikTok had even further-reaching regulations. When their videos popped up on the screens of the TikTok moderation teams in Berlin, Beijing or Barcelona – after 6,000 to 10,000 views – they were automatically tagged as „Auto R“.

As a result, if these videos exceeded a certain number of views, they automatically ended up in the „not recommend“ category. Such a categorization means that a video no longer appears in the algorithmically compiled For-You-Feed, which the user sees when opening the app.

Strictly speaking, such videos are not deleted – but in fact they hardly have an audience.

For users hoping for a large audience for their duets, comedy sketches, pranks and dance choreographies, the For-You-Feed is what they aspire to. Those who make it there will play on the big stage and possibly become „TikTok-famous“.

Many users tag their videos with hashtags like #foryou or #fyp. According to TikTok’s instructions to the moderators, people with disabilities were to be kept away from this stage.

The guidelines also give examples of the characteristics to which this applies: „facial disfigurement,“ „autism,“ and „Down syndrome“. Moderators were supposed to judge whether someone has these characteristics and mark the video accordingly in the review process. On average, they have about half a minute to do this, as our source at TikTok reports.

Recognizing autism based on 15 seconds of video

The rules raise questions on a very practical level: How is a moderator supposed to recognize whether someone has an autism spectrum disorder based on 15 seconds of video? This instruction is one of several incomprehensible rules that are as confusing to the moderators themselves as to outsiders, our source at TikTok told us.

Even more fundamentally, however, the directive shows ignorance of the debates about the visibility of people with disabilities in the media. These debates have been going on for the past few years – driven mainly by the people affected themselves.

While activists are calling for a barrier-free Internet and visibility, TikTok has deliberately put barriers in place – without those affected suspecting anything.

A twisted idea of care

Constantin Grosch of the organization AbilityWatch describes this procedure as „overriding and exclusionary“. People with disabilities are already under-represented in the media and patronised.

„The regulation listed here transfers this behaviour to new digital platforms, on which the visibility of disabled people is deliberately reduced out of misguided and unnecessary care“, says Grosch.

Considering that a widespread form of bullying is ghosting – deliberately ignoring another person – Grosch calls this practice devastating. He says measures against cyberbullying must target the acts, not the victims, and should apply to all users equally.

Grosch points out that marking people based on disability seems particularly outlandish in Germany. Between 1940 and 1941, the Nazis systematically recorded and murdered more than 70,000 people with physical, mental and psychological disabilities as part of „Aktion T4“.

The sentiment was shared by Manuela Hannen and Christoph Krachten from Evangelische Stiftung Hephata. The German foundation supports people with disabilities and is currently setting up an inclusive social media team.

„We perceive this as censorship for which there is no basis. It is completely absurd not to punish the trolls, but the victims of cyberbullying“, Hannen and Krachten said.

Inclusion activist Raul Krauthausen tells netzpolitik.org: „Protecting a supposed victim from himself is always difficult. Disabled people are not all the same. Some can deal with it better, others worse – just like non-disabled people.“

PR campaigns for diversity and inclusion

One source familiar with moderation reported that staff repeatedly pointed out the problems of this policy and asked for a more sensitive and meaningful policy.

However, their comments were dismissed by the Chinese decision-makers. The rules were mainly handed down from Beijing. This is largely in line with what the Washington Post learned from former TikTok employees in the USA.

TikTok is currently trying to make nice in Germany, the US and other markets where the app has grown rapidly. The platform has worked towards ostracizing cyberbullying, with hashtag campaigns on body positivity and respectful behaviour. On Safer Internet Day on February 5, TikTok invited people to „send a strong signal through the platform: A signal for inclusion, diversity and security and against bullying, hate speech or insulting behaviour“.

At that time, moderators were still instructed to drastically limit the reach of people with disabilities and others considered to be at risk of bullying.

The concerns about TikTok are fuelled by the fact that the company is much less transparent about its policies than its Silicon Valley competitors. For a long time, it did not reveal anything about the basis on which moderation decisions are made.

Under the recent pressure of public attention, especially with regard to the protests in Hong Kong, TikTok is now trying to clearly distance itself from the accusation that its content is directed by the Chinese government.

TikTok is operated by the Chinese company ByteDance, currently said to be worth about 75 billion dollars, which is more than Uber and Snapchat combined, reports the Washington Post. The owner of ByteDance is Zhang Yiming, one of China’s richest men.

In 2017, ByteDance bought the popular US video sharing app Musical.ly and placed it under the umbrella of its own brand TikTok. Since then, the app, which is particularly popular among young users, has been growing rapidly. Recently, it is said to have reached the mark of one billion users – a faster growth than any other social media platform before.

TikTok: Never intended as a long-term solution

In the meantime, TikTok also seems to have noticed that the regulations on dealing with bullying were not ideal. A spokeswoman told netzpolitik.org that the rules had been chosen „at the beginning“ to counteract bullying on TikTok.

„This approach was never intended to be a long-term solution and although we had a good intention, we realised that it was not the right approach“. The rules have now been replaced by new, nuanced rules. The technology for identifying bullying has been further developed and users are encouraged to interact positively with each other.

This sounds as if the rules are a relic from the platform’s distant past. However, documents available to netzpolitik.org show that the instructions to moderators were in place at least until September.

TikTok did not want to comment on our further questions as to how the rules came about, during what time they applied and whether disabled people’s associations were consulted for the development of the new rules.

A list of „special users“

In addition to the above rules, TikTok moderators maintained a list of „special users“ who were considered especially vulnerable to bullying. These users were generally rated as a risk and their videos were automatically capped with the „Auto R“ mark so that they did not exceed a certain number of views.

The list names 24 accounts, including people who post videos with hashtags such as #disability or write „Autist“ in their biographies. But the list also includes users who are simply fat and self-confident. A striking number show a rainbow flag in their biographies or describe themselves as lesbian, gay or non-binary.

One of the accounts on the list, „miss_anni21“, belongs to Annika. The 21-year-old works as an educator in a kindergarten and publishes sketches, tributes to her mother, and above all dance videos showing her in full physical exertion. Subtitle: „I love dancing! #confident #me #fatwoman“.

Annika learned from our investigation that TikTok kept her on a special list. „I think it’s discriminatory that people are downgraded,“ she says to netzpolitik.org over the phone. „That’s inhuman.“ She wonders how she ended up on the list in the first place.

Of course, Annika says, there are people who are mentally less stable and cannot cope with hatred. But she insists she is not one of them.

About a year ago, two of Annika’s dance videos went viral. The number of her followers blew up from 800 to 10,000. Today there are more than 22,000 people watching her dance.

With the attention came the hateful comments. „Kill yourself, nobody in the world wants you.“

The negative attention lasted for two to three months, Annika says. „But I’m the kind of person who doesn’t care“.

Annika says this is due to her new audience on TikTok. „People follow me now who see me as a role model. That made me even stronger.“

That TikTok limits her reach – and thus supposedly protects her from herself – is unnecessary, she says.

In the past weeks Annika gained 4,000 new followers. She has the impression that her videos are now reaching more people. Has the list been taken out of circulation? TikTok has not responded to our detailed enquiries.

About this research and the sources:

Our knowledge about content moderation at TikTok in Germany is based on hours of conversation between netzpolitik.org and a source that has insight into moderation structures and policy at the company. We verified the identity of the source and its employment contract. We cannot and do not want to describe the source in more detail to protect it.

—

Should you have further information or documents about TikTok, we would love to hear from you. You can also send information to us directly – encrypted if you wish. Do not use company e-mail addresses, telephone numbers, networks or devices for this purpose.
